Walk-thru additional changes required to support very large arrays using an Actuarial Factor Table Lookup example.

Overview

Increasing the size of a COBOL Array can seem easy once you get the hang of it. But, what if the array size needs to be very large? In this case, there may be a few additional challenges.

Use Case

Joint & Survivor Actuarial Factor tables can be very large. A two-way table lookup for a large range of annuitant and survivor ages can include thousands of entries.

Consider three different actuarial factor table examples for this discussion:

- Medium – Using whole annuitant ages from 20 to 100 and whole survivor ages from 1 to 100. This table would include 81 annuitant age increments and 100 survivor age increments for a total of 8100 entries.

- Large – Using quarterly (i.e. 60.00, 60.25, 60.50, 60.75, 70.00) annuitant ages from 35 to 85 and quarterly survivor ages from 18 to 85. This table would include 201 annuitant age Increments x 269 survivor age increments for a total of 54069 entries.

- Very Large – Using monthly (i.e. 60.00, 60.083333, 60.166667,60.25) annuitant ages from 45 to 75 and monthly survivor ages from 45 to 75. This table would include 361 annuitant age increments and 361 survivor age increments for a total of 130,321 entries.

For this discussion we’ll be considering the following topics:

- Array Increase Alternatives

- Standard Array Increase Methodology

- Special Maximum Data Space Limitations

- Special Dynamic SQL Counter Size Limitations

- Special Index Variable Size Limitations

- Performance Optimization

Array Increase Alternatives

There are several alternative methods to implementing actuarial factors that would not require increasing the COBOL Array size.

Reduce Overall Age Ranges or Age Increments

Consider reducing the number of entries by limiting the age ranges or age increments. The delivered array size for the number of entries in a Table Lookup table is 1000. This would fit a two-way lookup table with a 30-year whole age range for both annuitant and survivor. This table would include 31 annuitant age increments and 31 survivor age increments for a total of 961 entries.

Interpolation of Whole Age Factors

If greater accuracy is required for partial year ages, consider using a table lookup for both the whole age lower and whole age higher and then interpolating the results in a custom statement.

Break into Multiple Tables

Consider breaking the table into multiple tables with abbreviated age ranges, and then configure a custom statement to use the table lookup alias appropriate based on the annuitant’s age.

Replace Table Lookup Alias with Actuarial Factor Alias

Consider using an actuarial factor alias instead of a table lookup alias. This will compute the factor based on actuarial assumptions such as mortality factors and interest rates using delivered PeopleSoft Pension Administration functionality. Configuration and testing of actuarial factor functionality can be difficult, and specialized knowledge may be required to get results that exactly match actuarial factor tables published by the Actuary.

Standard Array Increase

Assuming the final requirement is that all possible ages and factors are stored in one table and referenced by a Table Lookup Alias, the next step is to follow the standard array increase procedure.

Sample Configuration

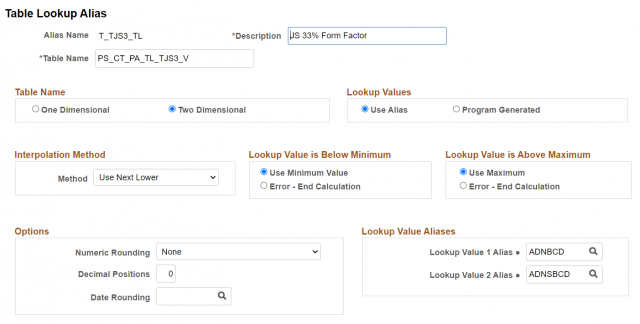

This is the Table Lookup Alias configuration for the example:

The table identified is a custom table/view that returns the actuarial factor data in the required format.

The actuarial factor data for the Medium, Large, or Very Large factor table examples can be loaded for testing.

Array Declaration

The delivered array declaration is a nested array of unique table lookup tables and all the related table rows.

A row is added to the W-LOOKUP-TABLES array for each unique Table Name referenced in any Table Lookup Aliases that needs to be resolved as the process executes. The maximum number of rows actually added to this array may be less than the unique table names included in all Table Lookup Aliases defined.

A row is added to the W-TL-TBL-VAL-COLS array for each row retrieved from the database table identified by the Table Name. The term COLS here might be easier to understand if it were ROWS – it’s the array of columns (COLS) that make up a row.

Here is how the array is defined as delivered, with space for 100 unique table names and 1000 lookup rows per table:

01 W-TABLES-FOR-LOOKUP.

03 W-MAX-TSFL-COL-CNT PIC 9(4) COMP VALUE 1000.

03 W-TFL-TBL-CNT PIC 9(4) COMP VALUE 0.

03 W-HLD-ROW-CNT PIC 9(4) COMP.

03 W-LOOKUP-TABLES OCCURS 100 TIMES

INDEXED BY TSFLIDX.

05 W-TL-TBL-NAME PIC X(18).

05 W-TL-TBL-ROW-CNT PIC 9(4) COMP.

05 W-TL-TBL-YCYCL-NUM PIC 9(4) COMP.

05 W-TL-TBL-VAL-COLS OCCURS 1000 TIMES

INDEXED BY COLIDX

COLIDXLO

COLIDXHI.

NOCLN 07 TL-1ST-COL.

NOCLN 09 TL-1ST-COL-DEC-VAL PIC 9(9)V9(6).

NOCLN 09 TL-1ST-COL-DATE-VAL PIC X(10).

NOCLN 07 TL-2ND-COL.

NOCLN 09 TL-2ND-COL-DEC-VAL PIC 9(9)V9(6).

NOCLN 09 TL-2ND-COL-DATE-VAL PIC X(10).

NOCLN 09 TL-2ND-COL-CHAR-VAL PIC X(01).

NOCLN 07 TL-3RD-COL.

NOCLN 09 TL-3RD-COL-DEC-VAL PIC 9(9)V9(6).

NOCLN 09 TL-3RD-COL-DATE-VAL PIC X(10).

NOCLN 09 TL-3RD-COL-CHAR-VAL PIC X(01).Array Size Estimation

The absolute maximum number of unique Table Lookup tables are found with this simple query:

select distinct table_name from ps_pa_tbl_lookup;Note that this result includes all tables, not just tables used during a process, and may be greater than the actual maximum number of unique Table Lookup tables encountered in actual processes, particularly if the Table Lookup is segregated by Plans that are not usually processed together in one process.

The number of rows in the Table Lookup table is found with this simple query:

select count('x') from ps_ct_pa_tl_tjs1_v;Array Size Increase – Medium

First, increase the array size to accommodate the Medium actuarial table example with 8,100 entries:

. . .

* 03 W-MAX-TSFL-COL-CNT PIC 9(4) COMP VALUE 1000.

03 W-MAX-TSFL-COL-CNT PIC 9(4) COMP VALUE 8200.

. . .

* 05 W-TL-TBL-VAL-COLS OCCURS 100 TIMES

05 W-TL-TBL-VAL-COLS OCCURS 8200 TIMES

. . . Testing this change, after compilation, passes successfully. So a reasonable array size increase does not encounter any additional challenges.

Array Size Increase – Large

Next, increase the array size to accommodate the Large actuarial table example with 54,069 entries:

. . .

* 03 W-MAX-TSFL-COL-CNT PIC 9(4) COMP VALUE 1000.

03 W-MAX-TSFL-COL-CNT PIC 9(4) COMP VALUE 54100.

. . .

* 05 W-TL-TBL-VAL-COLS OCCURS 100 TIMES

05 W-TL-TBL-VAL-COLS OCCURS 54100 TIMES

. . . For this scenario, a “Data space too large” error message is returned by the compiler.

Maximum Data Space Limitations

A maximum memory size can be allocated to a COBOL data definition. When exceeded the following message is returned by the compiler:

/u01/psoft/tools/pt85613/setup/pscbl_mf.mak : Compiling PAPUALTL.cbl ...

368 09 TL-3RD-COL-CHAR-VAL PIC X(01).

* 16-S************************************************* ( 0)**

** Data space too large

cob64: error(s) in compilation: PAPUALTL.cbl

So, 100 Tables with 54100 Rows exceeds this data space limitation.

The number and size of the data fields included in the Rows impact the total memory required. In this case, there are not many fields, but the numeric fields are not compressed, so maybe compressing the fields will reduce the overall memory requirement and fit under the data space size limitation:

05 W-TL-TBL-VAL-COLS OCCURS 54100 TIMES

INDEXED BY COLIDX

COLIDXLO

COLIDXHI.

NOCLN 07 TL-1ST-COL.

NOCLN * 09 TL-1ST-COL-DEC-VAL PIC 9(9)V9(6).

NOCLN 09 TL-1ST-COL-DEC-VAL PIC 9(9)V9(6) COMP-3.

NOCLN 09 TL-1ST-COL-DATE-VAL PIC X(10).

NOCLN 07 TL-2ND-COL.

NOCLN * 09 TL-2ND-COL-DEC-VAL PIC 9(9)V9(6).

NOCLN 09 TL-2ND-COL-DEC-VAL PIC S9(9)V9(6) COMP-3.

NOCLN 09 TL-2ND-COL-DATE-VAL PIC X(10).

NOCLN 09 TL-2ND-COL-CHAR-VAL PIC X(01).

NOCLN 07 TL-3RD-COL.

NOCLN * 09 TL-3RD-COL-DEC-VAL PIC 9(9)V9(6).

NOCLN 09 TL-3RD-COL-DEC-VAL PIC S9(9)V9(6) COMP-3.

NOCLN 09 TL-3RD-COL-DATE-VAL PIC X(10).

NOCLN 09 TL-3RD-COL-CHAR-VAL PIC X(01).

This change alone was not enough to fit under the data space size limitation.

The number of tables is included in the data space limitation. The test implementation includes far fewer than 100 unique Table Lookup tables. So the Table array can be decreased:

. . .

* 03 W-MAX-TSFL-TBL-CNT PIC 9(4) COMP VALUE 100.

03 W-MAX-TSFL-TBL-CNT PIC 9(4) COMP VALUE 25.

. . .

* 03 W-LOOKUP-TABLES OCCURS 100 TIMES

03 W-LOOKUP-TABLES OCCURS 25 TIMES

. . .This change was enough to decrease the memory requirement of the data definition below the data space size limitation.

Testing this change, after successful compilation, returns a new error related to the size of the sequence number variable used during the execution of the dynamic SQL used to retrieve the table values.

Dynamic SQL Table Lookup Sequence Size Limitation

The following error message was returned in the application trace output:

SQL FETCH

In Pgm Section : SQLRT: DA000-FETCH

With Return Code: 01455

Error Message : ORA-01455: converting column overflows integer datatypeThe dynamic SQL used to retrieve the actuarial factor table values, as found in the COBOL SQL trace, works just fine and does not return an error:

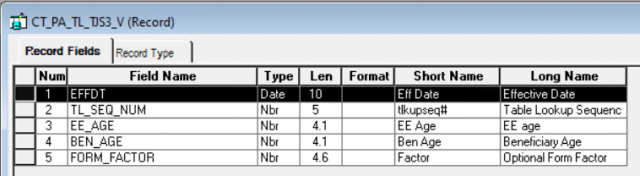

COM Stmt=SELECT TL_SEQ_NUM,EE_AGE,BEN_AGE,FORM_FACTOR

FROM PS_CT_PA_TL_TJS3_V

WHERE EFFDT = (SELECT MAX(EFFDT) FROM PS_CT_PA_TL_TJS3_V WHERE EFFDT <= :1)

ORDER BY TL_SEQ_NUM

Bind-1, type=SQLPDTE, length=10, value=2023-02-01The COBOL code for the setup of select variables for the dynamic SQL allows for only a small integer value to be retrieved. A small integer can hold values between -32,768 and 32,767. A small integer variable is not large enough to hold the maximum value of 54,069 we expect as the sequence number for the last row of the actuarial factor table. So, increase the variable size in the dynamic SQL setup as below:

******************************************************************

* *

EY100-SELECT-SETUP SECTION.

*

*****************************************************************

. . .

*

* SETUP TO READ TL SEQUENCE NUMBER

*

* MOVE 2 TO SETUP-LENGTH OF SLCTS OF DYSQL

MOVE 4 TO SETUP-LENGTH OF SLCTS OF DYSQL

(SETUP-COUNT OF SLCTS OF DYSQL)

* SET SETUP-TYPE-SMALLINT OF SLCTS OF DYSQL (SETUP-COUNT

SET SETUP-TYPE-INT OF SLCTS OF DYSQL (SETUP-COUNT

OF SLCTS OF DYSQL) TO TRUE

CALL 'PTPSETAD' USING SETUP-DATA-PTR OF SLCTS OF DYSQL

(SETUP-COUNT OF SLCTS OF DYSQL),

SLCT-TL-SEQ-NUM

. . .The field SLCT-TL-SEQ-NUM is already defined as large enough to hold the 54,069 value:

SLCT-TL-SEQ-NUM PIC S9(05) COMP.This field is not used anywhere else in the program.

Testing again, after these changes and a successful compile, was successful. The table lookup alias returned the correct actuarial factor for the annuitant and survivor ages.

Index Variable Size Limitations

The only three indexes used to reference these table values are COLIDX, COLIDXHI, and COLIDXLO defined using the INDEXED BY clause. These index values should be large enough to handle an even larger table such as in our Very Large example with 130,321 entries.

After the array sized above is increased above 130,320 and adjustments made for the data space size, the Large actuarial table example should work successfully.

However, in many other cases indexes are defined as follows:

10 W-IDX PIC 9(04) VALUE 0 COMP.For arrays that are referenced by indexes like the one defined above, the index size would be limited to 65,536 elements, or 32,767 if the variable was defined as signed, like PIC S9(04). There may cause problems in the program if the COBOL Array size is increased above these limits. When variables like these are increment above the limit, like 65536 + 1, the variable starts again at zero. This could generate unexpected results or introduce a loop.

Performance Optimization

The Table Lookup module (PAPUALTL) loads all rows from the lookup table. Then it searches through the rows looking for the appropriate result based on the Table Lookup Alias configuration and in some cases the Program Generated date.

An advantage of loading the large tables could be faster performance when many values are looked up in the table – such as when running a process for a large group list.

For fewer lookups, or even in the large group process case, constructing a dynamic SQL statement that retrieves the individual record with the result table lookup value might execute faster. The challenge to changing this design would be creating a SQL appropriate for any combination of configuration options for any supported database platform, and that’s probably why the current design was used.

Leave a Reply

You must be logged in to post a comment.